Run ACCESS-CM

Warning

Important for accessdev users!

If you were an accessdev user, make sure you are a member of the hr22 and ki32 projects.

Then, refer to instructions on how to Set up persistent session workflow for ACCESS-CM, and how to port suites from accessdev.

Prerequisites

General prerequisites

Before running ACCESS-CM, you need to Set Up your NCI Account.

If you are unsure whether ACCESS-CM is the right choice for your experiment, take a look at the overview of ACCESS Models.

Model-specific prerequisites

- MOSRS account

The Met Office Science Repository Service (MOSRS) is a server run by the UK Met Office (UKMO) to support collaborative development with partner organisations. MOSRS contains the source code and configurations for some model components in ACCESS-CM (e.g., the UM).

To apply for a MOSRS account, please contact your local institutional sponsor.

- Join the access, hr22, ki32 and ki32_mosrs projects at NCI

To join these projects, request membership on the respective access, hr22, ki32 and ki32_mosrs NCI project pages.

Tip

To request membership for the ki32_mosrs subproject you need to have a MOSRS account and be a member of the ki32 project.

For more information on how to join specific NCI projects, refer to How to connect to a project.

- Connection to an ARE VDI Desktop (optional)

To run ACCESS-CM, start an Australian Research Environment (ARE) VDI Desktop session.

If you are not familiar with ARE, check out the Getting Started on ARE section.

Set up an ARE VDI Desktop (optional)

To skip this step and instead run ACCESS-CM from a Gadi login node, refer to instructions on how to Set up ACCESS-CM persistent session.

Launch ARE VDI Session

Go to the ARE VDI page and launch a session with the following entries:

- Walltime (hours) → 2

This is the amount of time the ARE VDI session will stay active for.

ACCESS-CM does not run directly on ARE.

This means that the ARE VDI session only needs to carry out setup steps as well as starting the run itself. All these tasks can be done within 2 hours.

- Queue → normalbw

- Compute Size → tiny (1 CPU)

As mentioned above, the ARE VDI session is only needed for setup and startup tasks, which can be easily accomplished with 1 CPU.

- Project → a project of which you are a member.

The project must have allocated Service Units (SU) to run your simulation. Usually, but not always, this corresponds to your $PROJECT.

For more information, refer to how to Join relevant NCI projects.

- Storage → gdata/access+gdata/hh5+gdata/hr22+gdata/ki32 (minimum)

This is a list of all project data storage, joined by plus (+) signs, needed for the ACCESS-CM simulation. In ARE, storage locations need to be explicitly defined to access data from within a VDI instance.

Every ACCESS-CM simulation can be unique and input data can originate from various sources. Hence, if your simulation requires data stored in project folders other than access, hh5, hr22 or ki32, you need to add those projects to the storage path.

For example, if your ACCESS-CM simulation requires data stored in /g/data/tm70 and /scratch/w40, your full storage path will be:

gdata/access+gdata/hh5+gdata/hr22+gdata/ki32+gdata/tm70+scratch/w40
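The storage string can also be assembled programmatically. The following is a minimal sketch, not part of the ACCESS-CM tooling: the function name build_storage is hypothetical, and it simply joins the four minimum locations listed above with any extra locations you pass in.

```shell
# Join storage locations with "+" for the ARE "Storage" field.
# The first four entries are the minimum listed above; any extra
# arguments (e.g. gdata/tm70) are appended in the order given.
# build_storage is a hypothetical helper name used for illustration.
build_storage() {
    storage="gdata/access+gdata/hh5+gdata/hr22+gdata/ki32"
    for extra in "$@"; do
        storage="${storage}+${extra}"
    done
    printf '%s\n' "$storage"
}

# Reproduces the tm70 and w40 example above.
build_storage gdata/tm70 scratch/w40
```

The printed string can be pasted directly into the Storage field of the ARE VDI form.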

Launch the ARE session and, once it starts, click on Launch VDI Desktop.

Open the terminal in the VDI Desktop

Once the new tab opens, you will see a Desktop with a few folders on the left.

To open the terminal, click on the black terminal icon at the top of the window. You should now be connected to a Gadi computing node.

Set up ACCESS-CM persistent session

To support the use of long-running processes, such as ACCESS model runs, NCI provides a service on Gadi called persistent sessions.

To run ACCESS-CM, you need to start a persistent session and set it as the target session for the model run.

Start a new persistent session

To start a new persistent session on Gadi, using either a login node or an ARE terminal instance, run the following command:

persistent-sessions start <name>

This will start a persistent session with the given name that runs under your default project.

If you want to assign a different project to the persistent session, use the option -p:

persistent-sessions start -p <project> <name>

Tip

While the project assigned to a persistent session does not have to be the same as the project used to run the ACCESS-CM configuration, it does need to have allocated Service Units (SU).

For more information, check how to Join relevant NCI projects.

To list all active persistent sessions run:

persistent-sessions list

The label of a newly-created persistent session has the following format:

<name>.<$USER>.<project>.ps.gadi.nci.org.au.

Specify ACCESS-CM target persistent session

After starting the persistent session, it is essential to assign it to the ACCESS-CM run.

The easiest way to do this is to insert the persistent session label into the file ~/.persistent-sessions/cylc-session.

You can do it manually, or by running the following command (by substituting <name> with the name given to the persistent session, and <project> with the project assigned to it):

cat > ~/.persistent-sessions/cylc-session <<< "<name>.${USER}.<project>.ps.gadi.nci.org.au"

For example, if the user abc123 started a persistent session named cylc under the project xy00, the command will be:

cat > ~/.persistent-sessions/cylc-session <<< "cylc.abc123.xy00.ps.gadi.nci.org.au"

For more information on how to specify the target session, refer to Specify Target Session with Cylc7 Suites.
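If you script this step, it may help to build the label from its parts and to create the ~/.persistent-sessions directory if it does not exist yet. The sketch below is an illustration under those assumptions; the helper names ps_label and set_cylc_session, and the optional fourth argument, are hypothetical and not part of the NCI tooling.

```shell
# Build a persistent session label:
#   <name>.<user>.<project>.ps.gadi.nci.org.au
# ps_label is a hypothetical helper name.
ps_label() {
    printf '%s.%s.%s.ps.gadi.nci.org.au\n' "$1" "$2" "$3"
}

# Write the label to the Cylc target-session file, creating the parent
# directory first. The optional 4th argument (target file) is a
# hypothetical convenience for testing; it defaults to the documented path.
set_cylc_session() {
    target="${4:-$HOME/.persistent-sessions/cylc-session}"
    mkdir -p "$(dirname "$target")"
    ps_label "$1" "$2" "$3" > "$target"
}

# Label for user abc123, session name cylc, project xy00.
ps_label cylc abc123 xy00
```

Calling set_cylc_session cylc "$USER" xy00 would then write the label to ~/.persistent-sessions/cylc-session.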

Tip

You can simultaneously submit multiple ACCESS-CM runs using the same persistent session without needing to start a new one. Hence, the process of specifying the target persistent session for ACCESS-CM should only be done once.

After specifying the ACCESS-CM target persistent session the first time, to run ACCESS-CM you only need an active persistent session whose name matches the specified target persistent session.

Terminate a persistent session

To stop a persistent session, run:

persistent-sessions kill <persistent-session-uuid>

Warning

When you terminate a persistent session, any model running on that session will stop. Therefore, you should check whether you have any active model runs before terminating a persistent session.

Get ACCESS-CM suite

ACCESS-CM comprises the model components UM, MOM, CICE and CABLE, coupled through OASIS. These components, which have different model parameters, input data and computer-related information, need to be packaged together as a suite in order to run.

Each ACCESS-CM suite has a suite-ID in the format u-<suite-name>, where <suite-name> is a unique identifier.

For this example you can use u-cy339, which is a pre-industrial experiment suite.
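A quick format check can catch typos in a suite-ID before it is passed to other commands. This is a sketch only: it assumes that <suite-name> consists of two lowercase letters followed by three digits (as in u-cy339), which is an assumption about the ID scheme rather than something stated on this page. The helper names are hypothetical.

```shell
# Return success if the argument looks like a suite-ID such as u-cy339.
# Assumption: <suite-name> is two lowercase letters plus three digits.
is_suite_id() {
    case "$1" in
        u-[a-z][a-z][0-9][0-9][0-9]) return 0 ;;
        *) return 1 ;;
    esac
}

# Strip the "u-" prefix to obtain <suite-name> (e.g. cy339).
suite_name() {
    printf '%s\n' "${1#u-}"
}

if is_suite_id u-cy339; then
    echo "suite name: $(suite_name u-cy339)"
fi
```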

Typically, an existing suite is copied and then edited as needed for a particular run.

Copy ACCESS-CM suite with Rosie

Rosie is an SVN repository wrapper with a set of options specific for ACCESS-CM suites.

To copy an existing suite on Gadi you need to follow three main steps:

Get Cylc7 setup

To get the Cylc7 setup required to run ACCESS-CM, execute the following commands:

module use /g/data/hr22/modulefiles

module load cylc7/23.09

Warning

Make sure to load Cylc version 23.09 (or later), as earlier versions do not support the persistent sessions workflow.

Also, before loading the Cylc module, make sure to have started a persistent session and assigned it to the ACCESS-CM workflow. For more information about these steps, refer to instructions on how to Set up ACCESS-CM persistent session.

MOSRS authentication

To authenticate using your MOSRS credentials, run:

mosrs-auth

Copy a suite

- Local-only copy

To create a local copy of the <suite-ID> from the UKMO repository, run:

rosie checkout <suite-ID>

The output will be similar to the following:

[INFO] create: /home/565/<$USER>/roses

[INFO] <suite-ID>: local copy created at /home/565/<$USER>/roses/<suite-ID>

- Remote and local copy

Alternatively, to create a new copy of an existing <suite-ID> both locally and remotely in the UKMO repository, run:

rosie copy <suite-ID>

The output will be similar to the following:

Copy "<suite-ID>/trunk@<trunk-ID>" to "u-?????"? [y or n (default)] y

[INFO] <new-suite-ID>: created at https://code.metoffice.gov.uk/svn/roses-u/<suite-n/a/m/e/>

[INFO] <new-suite-ID>: copied items from <suite-ID>/trunk@<trunk-ID>

[INFO] <new-suite-ID>: local copy created at /home/565/<$USER>/roses/<new-suite-ID>

A new <suite-ID> is generated within the repository and populated with descriptive information about the suite and its initial configuration.

For additional rosie options, run:

rosie help

Suites are created in the user's home directory on Gadi under ~/roses/<suite-ID>.

Each suite directory usually contains two subdirectories and three files:

- app → directory containing the configuration files for various tasks within the suite.
- meta → directory containing the GUI metadata.
- rose-suite.conf → main suite configuration file.
- rose-suite.info → suite information file.
- suite.rc → Cylc control script file (Jinja2 language).
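To confirm that a checkout has the usual layout described above, a small check along the following lines can be used. This is a sketch: check_suite_dir is a hypothetical helper, and because suites only usually contain these entries, a reported missing entry is a prompt to look closer rather than proof of a broken suite.

```shell
# Report any of the usual suite entries that are missing from a directory.
# Prints one line per missing entry; returns non-zero if any is absent.
# check_suite_dir is a hypothetical helper name.
check_suite_dir() {
    suite_dir="$1"
    missing=0
    for d in app meta; do
        [ -d "$suite_dir/$d" ] || { echo "missing directory: $d"; missing=1; }
    done
    for f in rose-suite.conf rose-suite.info suite.rc; do
        [ -f "$suite_dir/$f" ] || { echo "missing file: $f"; missing=1; }
    done
    return "$missing"
}
```

For example, check_suite_dir ~/roses/u-cy339 prints nothing and returns success when all five entries are present.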

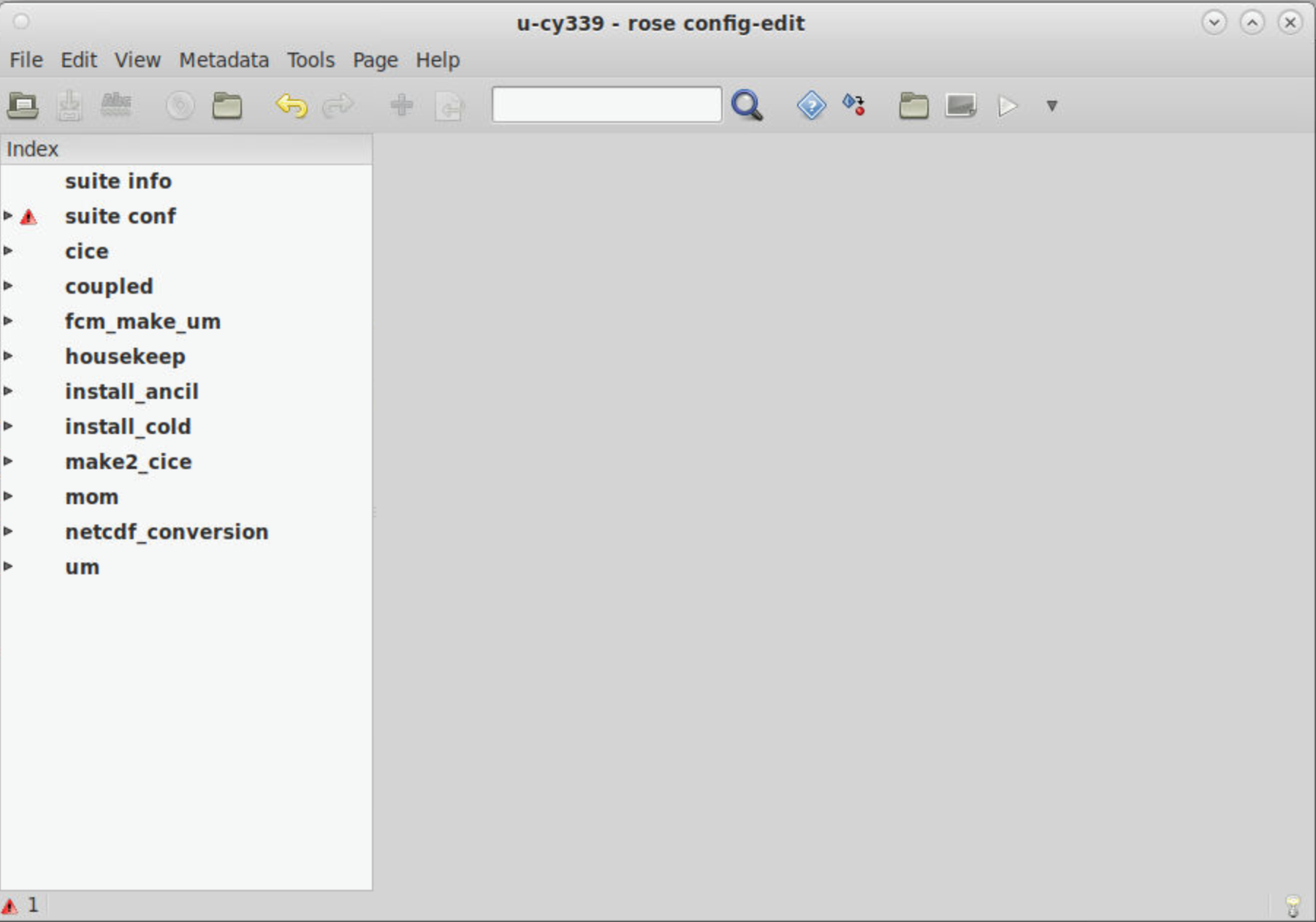

Edit ACCESS-CM suite configuration

Rose

Rose is a configuration editor which can be used to view, edit, or run an ACCESS-CM suite.

To edit a suite configuration, run the following command from within the suite directory (i.e., ~/roses/<suite-ID>) to open the Rose GUI:

rose edit &

Tip

The & is optional. It allows the terminal prompt to remain active while running the Rose GUI as a separate process in the background.

Change NCI project

To ensure that your suite is run under an NCI project of which you are a member, edit the Compute project field in suite conf → Machine and Runtime Options, and click the Save button.

For example, to run an ACCESS-CM suite under the tm70 project (ACCESS-NRI), enter tm70 in the Compute project field.

Warning

To run ACCESS-CM, you need to be a member of a project with allocated Service Units (SU). For more information, check how to Join relevant NCI projects.

Change run length and cycling frequency

ACCESS-CM suites are often run in multiple steps, each one constituting a cycle. The job scheduler resubmits the suite every chosen Cycling frequency until the Total Run length is reached.

To modify these parameters, navigate to suite conf → Run Initialisation and Cycling, edit the respective fields (using ISO 8601 Duration format) and click the Save button.

For example, to run a suite for a total of 50 years with a 1-year job resubmission, change Total Run length to P50Y and Cycling frequency to P1Y (the maximum Cycling frequency is currently two years).
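The number of cycles implied by these two settings is the Total Run length divided by the Cycling frequency. The sketch below illustrates that arithmetic for years-only durations such as P50Y and P1Y; the function names are hypothetical, and the sketch deliberately rejects other ISO 8601 forms and run lengths that are not a whole multiple of the cycling frequency.

```shell
# Extract the number of years from a years-only ISO 8601 duration
# (e.g. P50Y -> 50). Other duration forms are rejected by this sketch.
duration_years() {
    case "$1" in
        P[0-9]*Y) years="${1#P}"; years="${years%Y}" ;;
        *) echo "unsupported duration: $1" >&2; return 1 ;;
    esac
    case "$years" in
        *[!0-9]*) echo "unsupported duration: $1" >&2; return 1 ;;
    esac
    printf '%s\n' "$years"
}

# Number of cycles = total run length / cycling frequency.
count_cycles() {
    total=$(duration_years "$1") || return 1
    cycle=$(duration_years "$2") || return 1
    if [ "$cycle" -eq 0 ] || [ $(( total % cycle )) -ne 0 ]; then
        echo "this sketch only handles whole multiples" >&2
        return 1
    fi
    echo $(( total / cycle ))
}

count_cycles P50Y P1Y   # prints 50
```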

Change wallclock time

The Wallclock time is the time requested by the PBS job to run a single cycle. If this time is insufficient for the suite to complete a cycle, your job will be terminated before completing the run. Hence, if you change the Cycling frequency, you may also need to change the Wallclock time accordingly. While the time required for a suite to complete a cycle depends on several factors, a reasonable estimate is 4 hours per simulated year.
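As a rough illustration of this rule of thumb, the sketch below converts a cycling frequency in whole years into an ISO 8601 duration. The function name estimate_wallclock and its optional second argument are hypothetical, and the result is only a starting point, since actual run time depends on the factors mentioned above.

```shell
# Estimate the wallclock time for one cycle as an ISO 8601 duration,
# using the rule of thumb of 4 hours per simulated year.
# $1 = cycling frequency in whole years
# $2 = hours per simulated year (optional, defaults to 4)
estimate_wallclock() {
    years="$1"
    hours_per_year="${2:-4}"
    echo "PT$(( years * hours_per_year ))H"
}

estimate_wallclock 1   # prints PT4H
estimate_wallclock 2   # prints PT8H
```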

To modify the Wallclock time, edit the respective field in suite conf → Run Initialisation and Cycling (using ISO 8601 Duration format) and click the Save button.

Change warm restart date

This ACCESS-CM configuration uses initial conditions from a previous ACCESS-CM simulation, a process known as warm restart.

The specific date of the initial conditions is set using the Warm restart date parameter, formatted as YYYYMMDD.
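A simple shape check can catch malformed dates before they are entered. The following sketch (with the hypothetical helper name is_yyyymmdd) only verifies the YYYYMMDD pattern; it does not check the day against the month or against the model calendar, and it does not confirm that restart files exist for that date.

```shell
# Check that a string has the YYYYMMDD shape:
# 8 digits, month 01-12, day 01-31. Format check only.
is_yyyymmdd() {
    case "$1" in
        [0-9][0-9][0-9][0-9][0-9][0-9][0-9][0-9]) ;;
        *) return 1 ;;
    esac
    mmdd="${1#????}"          # drop the 4-digit year
    case "${mmdd%??}" in      # month
        0[1-9]|1[0-2]) ;;
        *) return 1 ;;
    esac
    case "${mmdd#??}" in      # day
        0[1-9]|[12][0-9]|3[01]) return 0 ;;
        *) return 1 ;;
    esac
}

is_yyyymmdd 09500101 && echo "format looks valid"
```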

To modify the Warm restart date, edit the respective field in suite conf → Run Initialisation and Cycling and click the Save button.

Run ACCESS-CM suite

ACCESS-CM suites run on Gadi through a PBS job submission.

When the suite runs, its configuration files are copied on Gadi to /scratch/$PROJECT/$USER/cylc-run/<suite-ID>, and a symbolic link to this directory is created in your home directory under ~/cylc-run/<suite-ID>.

An ACCESS-CM suite comprises several tasks, such as checking out code repositories, compiling and building the different model components, running the model, etc. The workflow of these tasks is controlled by Cylc.

Cylc

Cylc (pronounced ‘silk’) is a workflow manager that automatically executes tasks according to the model's main cycle script suite.rc. Cylc controls how the job will be run and manages the time steps of each submodel. It also monitors all tasks, reporting any errors that may occur.

To run an ACCESS-CM suite, run the following command from within the suite directory:

rose suite-run

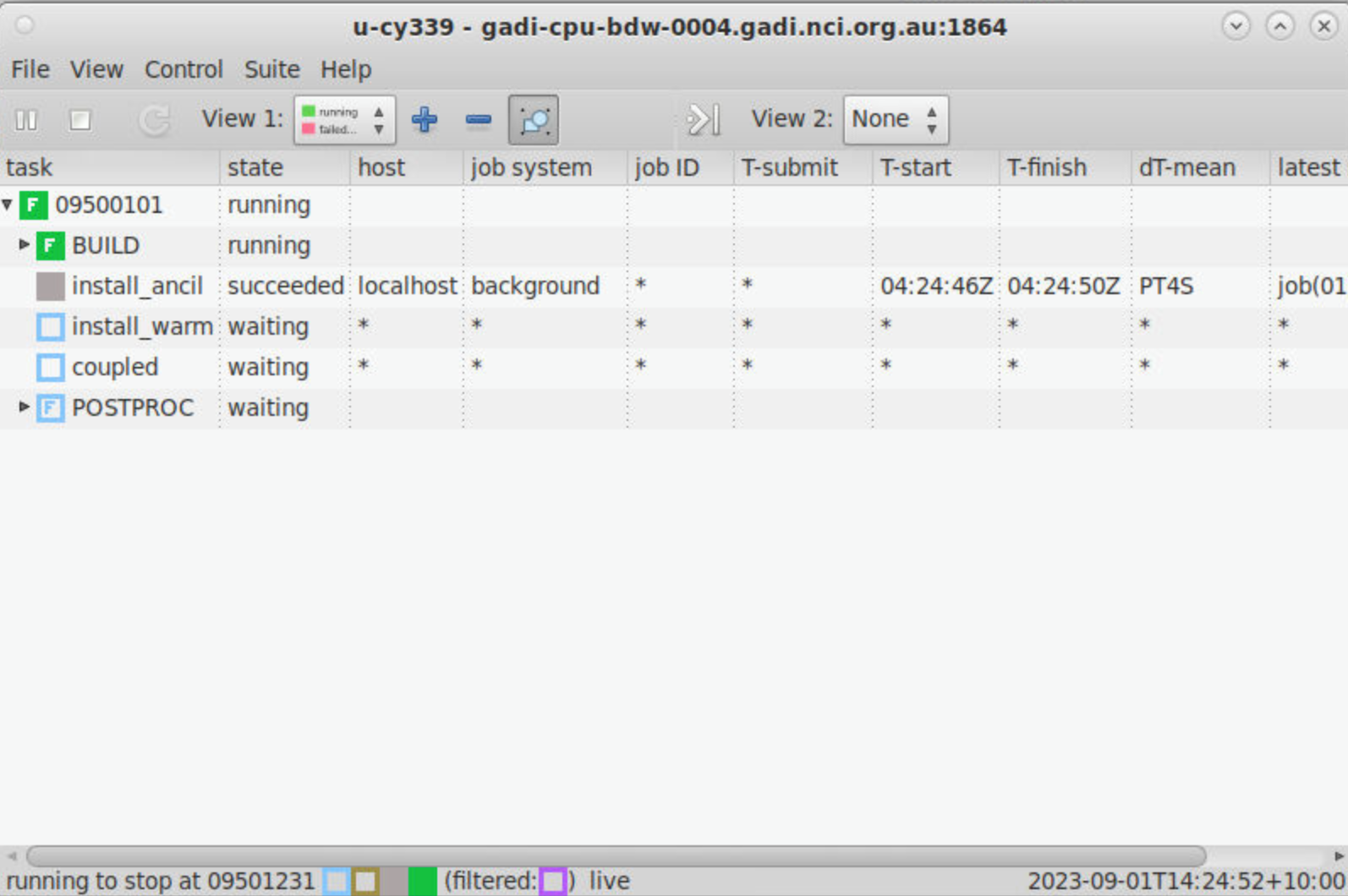

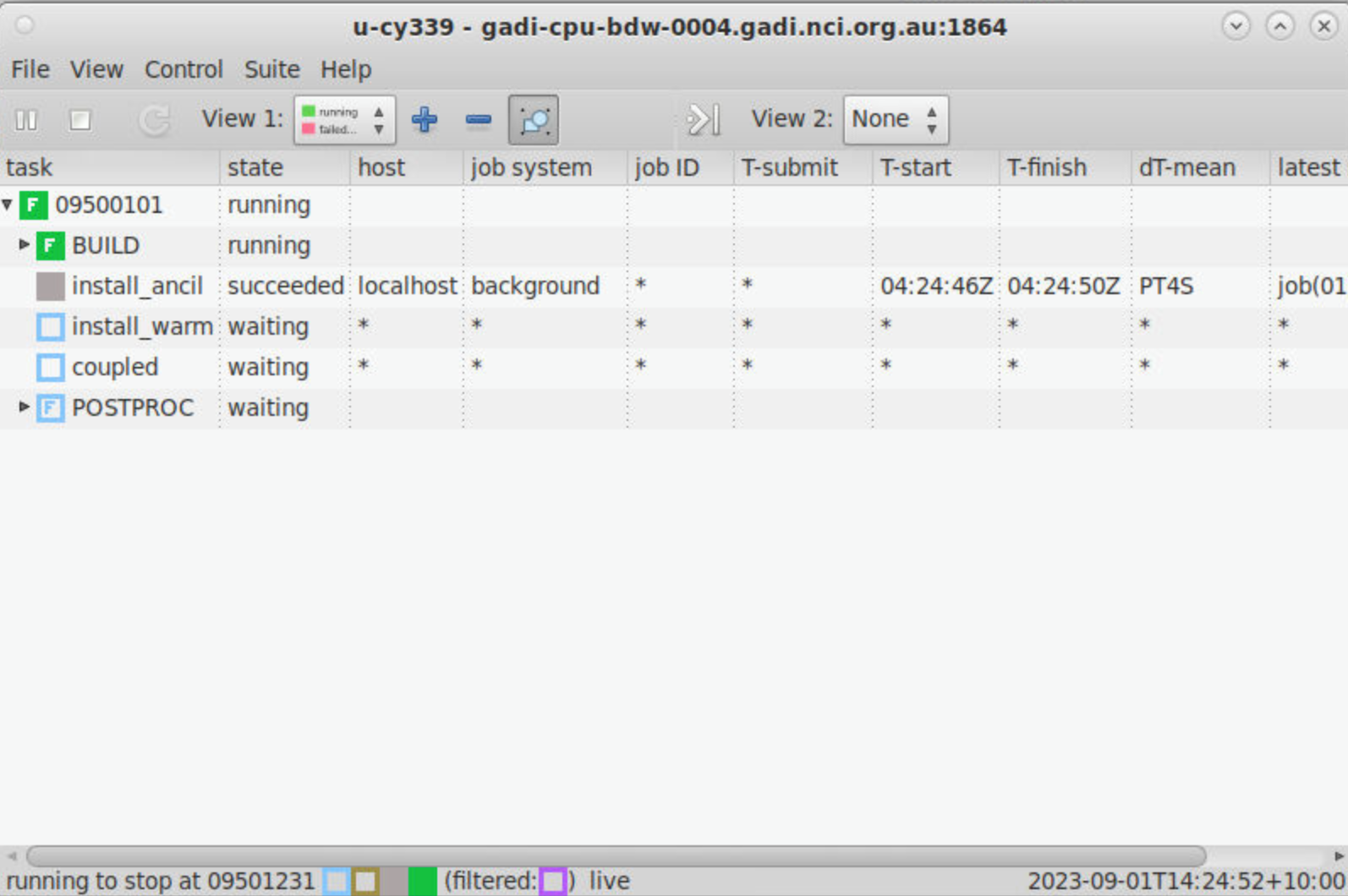

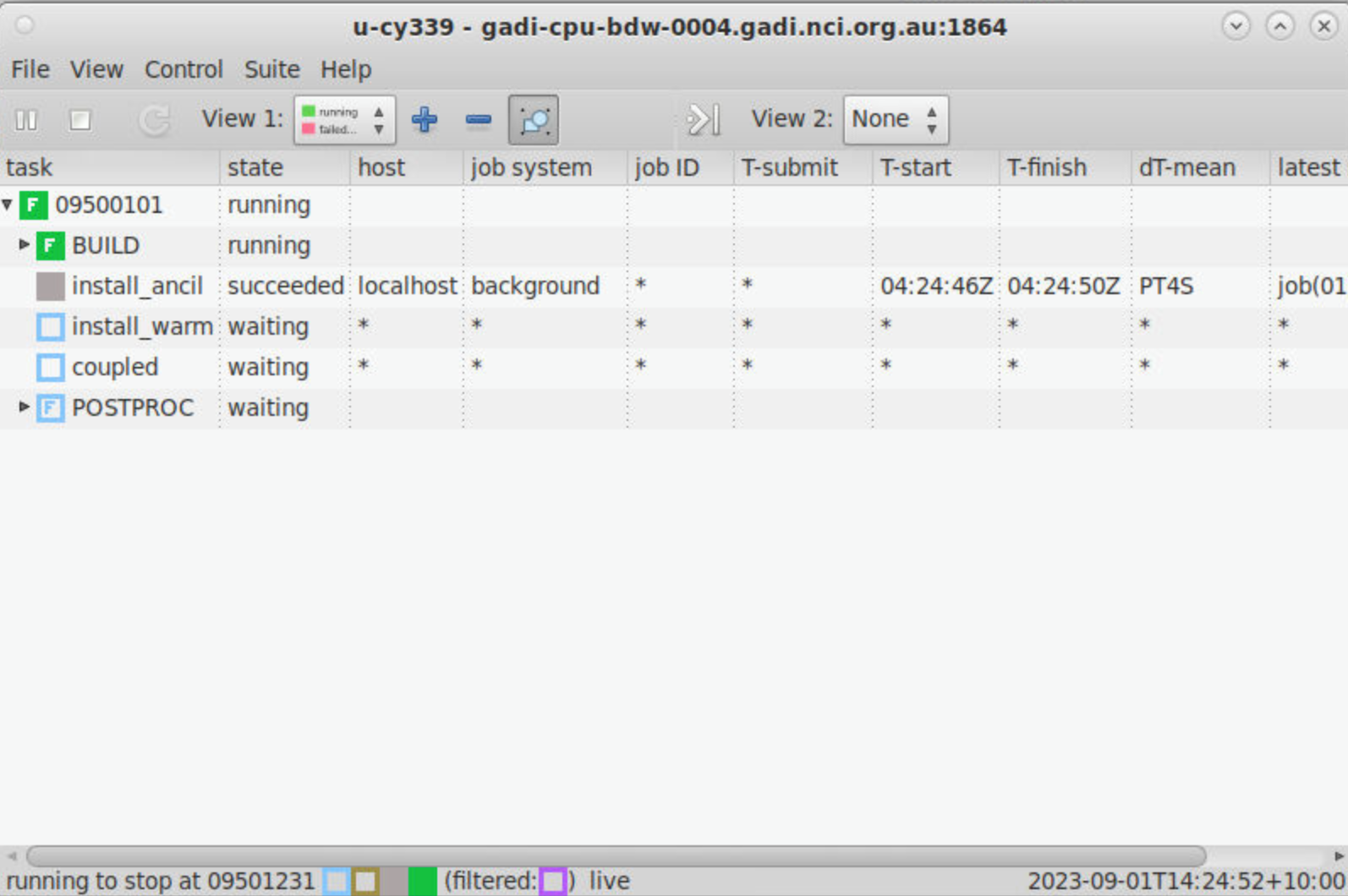

After the initial tasks are executed, the Cylc GUI will open. You can now view and control the different tasks in the suite as they are run:


Warning

After running the command rose suite-run, if you get an error similar to the following:

[FAIL] Suite "<suite-ID>" appears to be running:

[FAIL] Contact info from: "/home/565/<$USER>/cylc-run/<suite-ID>/.service/contact"

[FAIL] CYLC_SUITE_HOST=<persistent-session-full-name>

[FAIL] CYLC_SUITE_OWNER=<$USER>

[FAIL] CYLC_SUITE_PORT=<port>

[FAIL] CYLC_SUITE_PROCESS=<PID> /g/data/hr22/apps/cylc7/bin/python -s /g/data/hr22/apps/cylc7/cylc_7.9.7/bin/cylc-run <suite-ID> --host=localhost

[FAIL] Try "cylc stop '<suite-ID>'" first?

remove the contact file by running the following command (replacing <suite-ID> with the suite-ID):

rm "/home/565/${USER}/cylc-run/<suite-ID>/.service/contact"

Then run the rose suite-run command again.

You are done!

If you do not get any errors, you can check the suite output files after the run is complete.

You can now close the Cylc GUI. To open it again, run the following command from within the suite directory:

rose suite-gcontrol

Monitor ACCESS-CM runs

Check for errors

It is quite common, especially during the first few runs, to experience errors and job failures. Running an ACCESS-CM suite involves the execution of several tasks, and any of these tasks could fail. When a task fails, the suite is halted and a red icon appears next to the respective task name in the Cylc GUI.

To investigate the cause of a failure, we need to look at the logs job.err and job.out from the suite run. There are two main ways to do so:

Using the Cylc GUI

Right-click on the task that failed and click on View Job Logs (Viewer) → job.err (or job.out).

To access a specific task, click on the arrow next to the task to extend the drop-down menu with all the subtasks.

Through the suite directory

The suite's log directories are stored in ~/cylc-run/<suite-ID> as log.<TIMESTAMP>, and the latest set of logs is also symlinked in the ~/cylc-run/<suite-ID>/log directory.

The logs for the main job can be found in the ~/cylc-run/<suite-ID>/log/job directory.

Logs are separated into simulation cycles according to their starting dates, then divided into subdirectories according to the task name. They are then further separated into "attempts" (consecutive failed/successful tasks), where NN is a symlink to the most recent attempt.

In the example above, a failure occurred for the 09500101 simulation cycle (i.e. starting date: 1st January 950) in the coupled task. Hence, the job.err and job.out files can be found in the ~/cylc-run/<suite-ID>/log/job/09500101/coupled/NN directory.
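The directory layout described above can be turned into a small path helper. This is a sketch with a hypothetical function name (job_log_dir); it only builds the path and does not check that the directory exists.

```shell
# Build the path to the most recent attempt's logs for a given
# suite-ID, cycle and task:
#   ~/cylc-run/<suite-ID>/log/job/<cycle>/<task>/NN
# job_log_dir is a hypothetical helper name.
job_log_dir() {
    printf '%s/cylc-run/%s/log/job/%s/%s/NN\n' "$HOME" "$1" "$2" "$3"
}

# Path for the coupled task of the 09500101 cycle in suite u-cy339.
job_log_dir u-cy339 09500101 coupled
```

The result can be combined with standard tools, for example tail -n 50 "$(job_log_dir u-cy339 09500101 coupled)/job.err".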

Model Live Diagnostics

ACCESS-NRI developed the Model Live Diagnostics framework to check, monitor, visualise, and evaluate model behaviour and progress of ACCESS models currently running on Gadi.

For complete documentation on how to use this framework, check the Model Live Diagnostics documentation.

Stop, restart and reload suites

In some cases, you may want to control the running state of a suite.

If your Cylc GUI has been closed and you are unsure whether your suite is still running, you can scan for active suites and reopen the GUI if desired.

To scan for active suites, run:

cylc scan

To reopen the Cylc GUI, run the following command from within the suite directory:

rose suite-gcontrol

STOP a suite

To shut down a suite in a safe manner, run the following command from within the suite directory:

rose suite-stop -y

You can also check for, and delete, any PBS jobs related to your run:

- Check the status of all your PBS jobs:

qstat -u $USER

- Delete any job related to your run:

qdel <job-ID>

RESTART a suite

There are two main ways to restart a suite:

SOFT restart

To reinstall the suite and reopen Cylc in the same state it was prior to being stopped, run the following command from within the suite directory:

rose suite-run --restart

Warning

You may need to manually trigger failed tasks from the Cylc GUI.

HARD restart

To overwrite any previous runs of the suite and start afresh, run the following command from within the suite directory:

rose suite-run --new

Warning

This will overwrite all existing model output and logs for the same suite.

RELOAD a suite

In some cases the suite needs to be updated without necessarily having to stop it (e.g., after fixing a typo in a file). Updating an active suite is called a reload, where the suite is re-installed and Cylc is updated with the changes. This is similar to a SOFT restart, except new changes are installed, so you may need to manually trigger failed tasks from the Cylc GUI.

To reload a suite, run the following command from within the suite directory:

rose suite-run --reload

ACCESS-CM output files

All ACCESS-CM output files, together with work files, are available on Gadi inside /scratch/$PROJECT/$USER/cylc-run/<suite-ID>. They are also symlinked in ~/cylc-run/<suite-ID>.

While the suite is running, files are moved between the share and work directories.

At the end of each cycle, model output data and restart files are moved to /scratch/$PROJECT/$USER/archive/<suite-name>.

This directory contains two subdirectories:

- history
- restart
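The archive location can be derived from the suite-ID, since <suite-name> is the suite-ID without the u- prefix. The sketch below uses a hypothetical helper (archive_dir) that takes the project and user as explicit arguments; which project applies to your run is an assumption you should confirm against your suite configuration.

```shell
# Build the archive directory for a suite:
#   /scratch/<project>/<user>/archive/<suite-name>
# $1 = suite-ID (e.g. u-cy339), $2 = project, $3 = user.
# archive_dir is a hypothetical helper name.
archive_dir() {
    printf '/scratch/%s/%s/archive/%s\n' "$2" "$3" "${1#u-}"
}

# Example values: project tm70 and user abc123 are placeholders.
base=$(archive_dir u-cy339 tm70 abc123)
echo "$base/history"
echo "$base/restart"
```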

Output data

/scratch/$PROJECT/$USER/archive/<suite-name>/history is the directory containing the model output data, which is grouped according to each model component:

For the atmospheric output data, the files are typically a UM fieldsfile or netCDF file, formatted as <suite-name>a.p<output-stream-identifier><year><month-string>.

For the u-cy339 suite in this example, the atm directory contains:

Restart files

The restart files can be found in the /scratch/$PROJECT/$USER/archive/<suite-name>/restart directory, where they are categorised according to model components (similar to the history folder above).

The atmospheric restart files, which are UM fieldsfiles, are formatted as <suite-name>a.da<year><month><day>_00.

For the u-cy339 suite in this example, the atm directory contains:

Files formatted as <suite-name>a.xhist-<year><month><day> contain metadata information.
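The two naming patterns above can be expressed as small helpers, which is handy when scripting checks for a particular restart date. This is a sketch with hypothetical function names; the date 09500101 is illustrative only and is not taken from an actual listing of the archive.

```shell
# Atmospheric restart file: <suite-name>a.da<year><month><day>_00
# $1 = suite name (e.g. cy339), $2 = date as YYYYMMDD.
atm_restart_name() {
    printf '%sa.da%s_00\n' "$1" "$2"
}

# Matching metadata file: <suite-name>a.xhist-<year><month><day>
atm_xhist_name() {
    printf '%sa.xhist-%s\n' "$1" "$2"
}

atm_restart_name cy339 09500101   # prints cy339a.da09500101_00
atm_xhist_name cy339 09500101     # prints cy339a.xhist-09500101
```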

Port suites from accessdev

accessdev was the server used for the ACCESS-CM run submission workflow before the update to persistent sessions.

If you have a suite that was running on accessdev, you can run it using persistent sessions by carrying out the following steps:

Initialisation step

To set the correct SSH configuration for Cylc, some SSH keys need to be created in the ~/.ssh directory.

To create the required SSH keys, run the following command:

/g/data/hr22/bin/gadi-cylc-setup-ps -y

Tip

You only need to run this initialisation step once.

Set host to localhost

To enable Cylc to submit PBS jobs directly from the persistent session, the suite configuration should have its host set as localhost.

You can manually set all occurrences of host to localhost in the suite configuration files.

Alternatively, you can run the following command in the suite folder:

grep -rl --exclude-dir=.svn "host\s*=" . | xargs sed -i 's/\(host\s*=\s*\).*/\1localhost/g'
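Because the command above edits files in place, you may want to preview its effect first. The following sketch (hypothetical helper name preview_host_change) applies an equivalent substitution to the output of grep instead of to the files, so nothing is modified. It uses the POSIX class [[:space:]] in place of \s.

```shell
# Preview which lines the host substitution would change, without
# editing any file. Prints "<file>:<line number>:<line after change>".
# $1 = suite directory (defaults to the current directory).
# preview_host_change is a hypothetical helper name.
preview_host_change() {
    grep -rn --exclude-dir=.svn 'host[[:space:]]*=' "${1:-.}" |
        sed 's/\(host[[:space:]]*=[[:space:]]*\).*/\1localhost/'
}
```

Running preview_host_change ~/roses/<suite-ID> lists every affected line as it would appear after the change.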

Add gdata/hr22 and gdata/ki32 in the PBS storage directives

As the persistent sessions workflow uses files in the hr22 and ki32 project folders on Gadi, the respective folders need to be added to the storage directive in the suite configuration files.

You can do this manually or run the following command from within the suite directory:

grep -rl --exclude-dir=.svn "\-l\s*storage\s*=" . | xargs sed -i '/\-l\s*storage\s*=\s*.*gdata\/hr22.*/! s/\(\-l\s*storage\s*=\s*.*\)/\1+gdata\/hr22/g ; /\-l\s*storage\s*=\s*.*gdata\/ki32.*/! s/\(\-l\s*storage\s*=\s*.*\)/\1+gdata\/ki32/g'
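The intent of the command above is to append each storage location only if it is not already present. The sketch below shows that idea on a plain storage string, using a hypothetical helper (add_storage). Unlike the sed command, which looks for the text anywhere on the line, this sketch compares whole "+"-separated entries.

```shell
# Append a storage location to a "+"-separated storage string unless
# it is already one of the entries.
# $1 = current storage string, $2 = location to add.
# add_storage is a hypothetical helper name.
add_storage() {
    case "+$1+" in
        *"+$2+"*) printf '%s\n' "$1" ;;
        *) printf '%s+%s\n' "$1" "$2" ;;
    esac
}

storage="gdata/access+gdata/hh5"
storage=$(add_storage "$storage" gdata/hr22)
storage=$(add_storage "$storage" gdata/ki32)
echo "$storage"   # prints gdata/access+gdata/hh5+gdata/hr22+gdata/ki32
```

Applying add_storage a second time with the same location leaves the string unchanged.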

Warning

Some suites might not be ported this way.

If you have a suite that was running on accessdev and, even after following the steps above, the run submission fails, consider getting help on the Hive Forum.